A composer, a professor, and a computer walk into a bar...

… and have a contest about who writes the best music! Who wins? Read on to find out.

Audience: you! Especially if you’re interested in AI and music

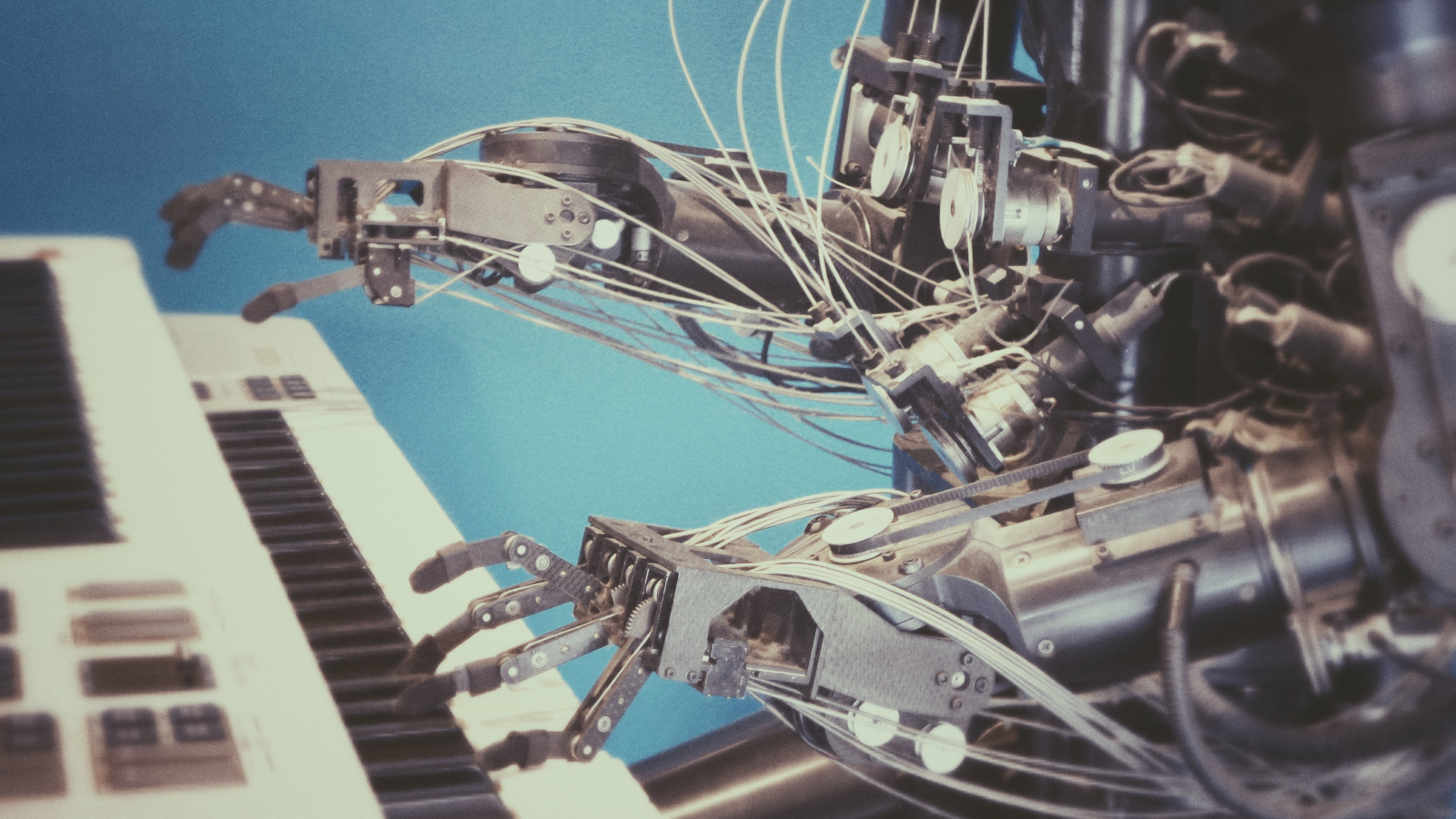

As AI becomes progressively more integrated into our everyday lives, it is not altogether improbable that these technologies might one day dominate in creative domains. Here, we’ll focus specifically on music. Can an algorithm ever attain the level of a human composer? Or will there always remain something indefinable and transcendent about a human creation, irreproducible by a machine imitator?

This post started life as a project for a data science ethics class. Together with my excellent collaborators Zach Murray and Kimon Vogt, I explored a tentative, prescriptive set of criteria for evaluating personhood in AI systems. Our original discussion, from which parts of this piece have been cribbed, can be found here.

A concert like no other

October 1997. A crisp autumn afternoon at the University of Oregon. Excited chatter bubbles from the growing crowd of students, professors, and music enthusiasts gathering at the concert hall, anticipating an hour of cerebral music and Baroque discussion. A sudden hush descends as esteemed pianist Winifred Kerner strides across the stage to the waiting Steinway.

She performed three pieces.

Only one was a true Bach.

In a musical showdown not dissimilar to the famous match between chess grandmaster Garry Kasparov and IBM’s Deep Blue, or the more recent headliner pitting Go master Lee Sedol against DeepMind’s AlphaGo, the Bach concert was organized as a competition between a human composer and a machine opponent. The contestants were as follows:

For the humans: Professor Steve Larson of the UO Music Department. Bach expert and music composer.

David Cope, creator of EMI. Source

For the machines: EMI (“Experiments in Musical Intelligence,” pronounced like “Emmy”). The brainchild of David Cope, a professor of algorithmic and computer music at UC Santa Cruz. EMI learns to imitate the style of various composers by studying their compositions.

Upon hearing about EMI, Larson took offense at the notion that a mere algorithm could approach the nuance and sublimity of a human composer. That EMI would dare to imitate a composer of Bach’s stature was nothing short of blasphemy. So Larson proposed a challenge. Three pieces would be performed before a public audience:

- A genuine Bach

- A composition by EMI, in the “style of Bach”

- A composition by Professor Larson himself

Then the audience, made up of music scholars, professionals, and students from the University of Oregon area, would vote on the authorship of each piece. The results were striking.

Listen to EMI for yourself! Click to hear

Much to Larson’s chagrin, the audience decided that EMI’s piece was the genuine Bach. Somewhat ironically, Larson’s own composition was misattributed to EMI. (As for the real Bach, it was misattributed to Larson.) In a remarkable triumph for computer-generated music, EMI duped an audience of experienced listeners into believing its music was written by a compositional master.1

When later interviewed about his creation, David Cope reflected that “when [listeners] assume the music is human, they are obviously moved and speak in the same terms as if it had been by Chopin [a famous composer]. But when I tell them that there is nothing behind the music but cold hard machinery doing addition and subtraction, then they won’t admit they were moved.”2 There seems to be something profoundly uncomfortable about attributing human qualities – artistry, creativity, emotion – to a machine-generated artifact. Why?

The Sims. Source

Perhaps listeners are uneasy with attributing emotional valence to a machine? And yet, if you’re old enough (but not too old) to have owned a Tamagotchi, you might recall how easy and natural it was to develop a real emotional bond with an entirely simulated pet. If you’ve ever played The Sims (or video games broadly), you might have experienced deep attachment and real emotional investment in the game characters. On a rational level, players may understand that the characters are nothing more than software abstractions. But it’s still easy to believe that a Sim experiences love, pain, hunger, excitement – the spectrum of human emotion – as evidenced by their interactions with the player.

Many recent examples in film (Her, Ex Machina, Blade Runner) and culture (fictosexuality, LaMDA) explore the deep emotional investment individuals can place in machine creations. So if people are generally okay with extending emotional currency to digital artifacts, what is it about EMI exactly that makes it hard for people to accept its music?

Human or machine?

Let’s try our own version of Larson’s contest.

Below are links to two different pieces. One was written by a human. The other was written by a machine. Listen to both, multiple times if you’d like, then decide which is which.

Which piece did you like better? Which sounded more “human”? Did you feel any emotions for either, or both? For the piece that sounds like a machine’s, what “gives it away”?

When you’re ready, scroll below the ASCII art to find out the answer!

[ASCII art spoiler break; the original drawings are signed “ejm 97” and “PG”]

All artwork is human-generated. Source: asciiart.eu

I have a confession to make – as it happens, both pieces were written by a machine! 3

But before you found out, did you genuinely believe one of the pieces was authored by a human? Does knowing the truth alter your listening experience?

“Real” music?

The modern-day successor to EMI is AIVA, which composed both pieces you listened to. The algorithm is designed by Aiva Technologies, a European startup that develops machine learning solutions for composing themed, cinematic music. As you may have experienced, AIVA’s music is often characterized as being emotional, coherent, and difficult to distinguish from a score written by a human composer. In fact, AIVA has been officially recognized as a composer by a European artists’ guild, the first algorithm to earn this distinction.

Yet however convincing the music sounds, it remains unalterable that AIVA is an algorithm. Under the hood, its compositions are produced by processes no more inspired or creative than the shifting of bits and adding of numbers. In contrast, a composer (or any artist, for that matter) seems closer to the divine. From the whispers of Muses, the mysterious Inner Spark, the deep sensitivity to all that is Beautiful and Sublime, the true Composer draws on any number of suitably mystical inspirations to weave subtle melodies uniquely capable of touching the soul. Surely, they are a world apart from anything as mundane as a simple computer?

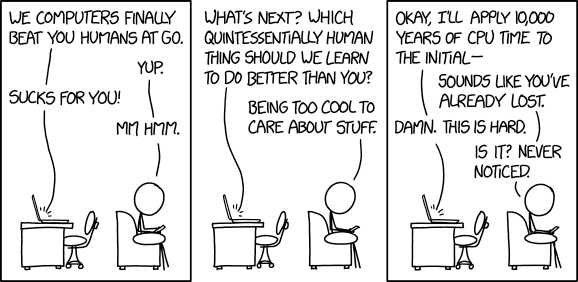

Perhaps the root of the discomfort in regarding EMI and AIVA as true musicians rests in the elevated status of music, and art broadly. We hold artistic works, as creative endeavors, to be the prime example of human sensitivity and achievement. To grant equal status to a machine would discount the experience for artists and audiences alike. If a machine can do it, what’s so special about the human artist? In this sense, the debate over EMI and AIVA stems not from a critique of the quality of their output, but from the danger they represent to the art community.

It doesn’t have to be this way. There is growing momentum among composers (and tech companies) to incorporate AI and machine learning into the creative pipeline. Google’s open-source platform Magenta offers a suite of tools aimed at complementing the creative process, rather than replacing it.4 Stuck on a melody? Use MusicVAE to come up with additional ideas. Struggling to nail down that exact synth sound you want? NSynth is here to help. I’ve experimented in this space myself, building an AI-assisted tool for live coding music performances. Developing creative aids like these is virtually unthinkable without modern AI techniques. With these tools in hand, AI-as-composer-aid is well positioned to leverage the strengths of AI-driven composition, without wholly sacrificing the human artist.

Short clip about NSynth, from Magenta. Click to view

Composers of tomorrow

What’s after AIVA? As these technologies improve, it is likely the AI composers of the future will become ever more compelling in their work. Will we ever reach a point where an AI composer gains widespread acceptance? Could an AI-composed piece make it on a Top 40 chart? Where would that leave human artists?

Perhaps in the future, music might be partitioned into two new categories: “standard” and “artisanal.” Standard music is written by AI. Press a button on a computer and presto! An instant hit. No whiny artists to pay, no lengthy wait for new music: a studio can produce quality music quickly and cheaply. And heck, if it sounds good, why not?

For listeners who remain resolutely bothered by AI composers, there is the second category. As the name suggests, “artisanal” music is still composed by a living, breathing artist. It’s more expensive to produce, and the quality might not match the carefully tuned perfection achievable by a machine, but it nonetheless commands a premium for that indefinable “human touch.”

In the not-that-far future, we may arrive at a coexistence between machine and human artistry, but other questions remain. Highlighting a few:

- Credit: algorithms like AIVA must have a training dataset in order to learn how to compose music. Typically, the pieces in the training set were written by humans. If AIVA composes a new piece, does the credit go to the algorithm, or to the artists in the training set? Before you answer, consider that AIVA could very well be copying entire measures directly from its training set, with most listeners none the wiser. Before you answer again, consider that human musicians also learn from a training set, and occasionally copy entire measures directly from their training set, with most listeners none the wiser.

- Copyright: does an algorithm own the pieces it composes? Or do they belong to the company that made it? In one striking court case, the Federal Court of Australia ruled that a particular piece of source code could not be copyrighted by the company because it was generated by a software agent, not a human author. Does a company have any more right over music that it didn’t compose? Can an algorithm claim meaningful ownership over its creations?

- Personhood: as our algorithms become progressively more sophisticated, will they ever gain a measure of autonomy, or even sentience? This is a gnarly can of worms to open. If you’re interested, please do refer to the original essay that inspired this post, where this issue is explored somewhat more deeply.
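To make the credit question a little more concrete, here is a deliberately tiny sketch of a model that "learns" from a training set. It is a first-order Markov chain over note names, which is an assumption chosen for brevity and not a description of how EMI or AIVA actually work. The melodies in `TRAINING_MELODIES` and all function names are made up for illustration. The point is only that every step the toy model takes is lifted from something it has seen, so stretches of its output can match the training set note for note.

```python
import random
from collections import defaultdict

# Hypothetical toy "training set": two short melodies written as note names.
TRAINING_MELODIES = [
    ["C4", "D4", "E4", "F4", "G4", "E4", "C4"],
    ["E4", "F4", "G4", "A4", "G4", "E4", "D4", "C4"],
]


def build_transitions(melodies):
    """Record, for each note, every note that followed it in the training set."""
    transitions = defaultdict(list)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current].append(following)
    return transitions


def generate(transitions, start, length, seed=0):
    """Walk the chain: each next note is sampled from the notes seen after the current one."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:  # the current note was never followed by anything
            break
        melody.append(rng.choice(options))
    return melody


def longest_borrowed_run(generated, melodies):
    """Length of the longest stretch of `generated` that appears verbatim in a training melody."""
    best = 0
    for melody in melodies:
        for i in range(len(generated)):
            for j in range(len(melody)):
                k = 0
                while (
                    i + k < len(generated)
                    and j + k < len(melody)
                    and generated[i + k] == melody[j + k]
                ):
                    k += 1
                best = max(best, k)
    return best


transitions = build_transitions(TRAINING_MELODIES)
new_melody = generate(transitions, start="C4", length=8)
print("Generated:", " ".join(new_melody))
print("Longest run copied verbatim:", longest_borrowed_run(new_melody, TRAINING_MELODIES))
```

Even in this toy, the "new" melody is stitched together entirely from note-to-note moves found in the training melodies. Whether that counts as copying or as learning is exactly the question the Credit bullet raises.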

Recommended readings

Looking for more? Check out the following resources if you want to…

- start building something! The Magenta docs and some of the literature in the field are a great place to start. Also, music21 is an excellent Python library for experimenting in algorithmic music composition.

- gripe about the intrusion of AI in music. You might find a kindred soul in Walter Benjamin, who grappled with related issues around the relationship between art and technology.

- learn more about broader issues around AI, creativity, and personhood. This review is a great place to start. You might also be interested in Edmond de Belamy, a striking application of AI to portraiture.

1. In a related vein, a few years ago, Google released an AI capable of composing Bach-like music as a Doodle. The underlying algorithms are entirely different, but the spirit is much the same.

2. https://archive.nytimes.com/www.nytimes.com/library/cyber/week/111197music.html

3. “Piece A” is “A Bucket of Spring”. “Piece B” is “Evening Star”. Both pieces were composed by AIVA. If you liked them, more are readily accessible on YouTube and Spotify.

4. I am in no way endorsed by Google or the Magenta team.