bayz: live coding + bayesian program learning

Introducing bayz: a live coding platform that combines the latest and greatest in Bayesian machine learning with uber nerd avant-garde performative music.

Audience: Professor Mark, and anyone else who stumbles here

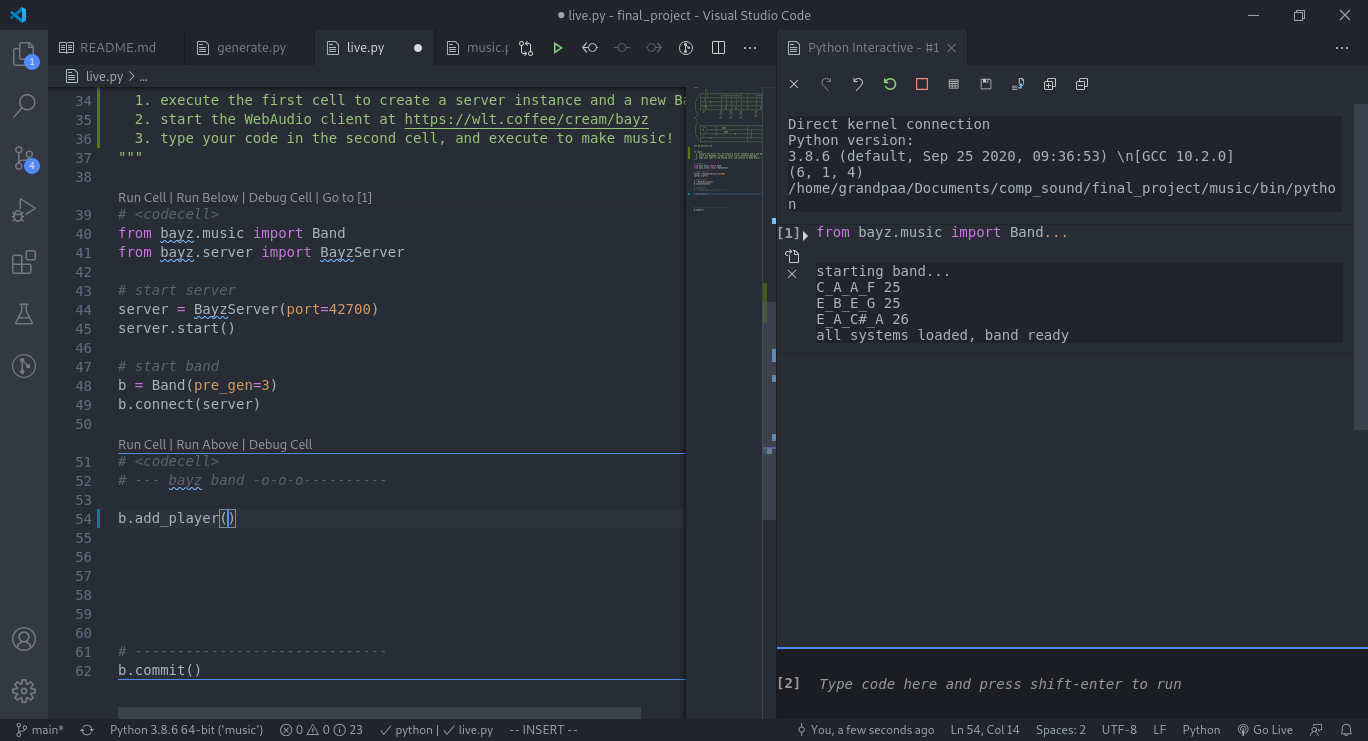

bayz band interface in VS Code

For the impatient

- A GitHub repo with everything you need to get started: wtong98/bayz

- A publicly hosted bayz beat client: bayz-beat

  - This client connects to localhost:42700, the default port for a bayz server instance

Background

bayz is the child of two very different fields: Bayesian Program Learning (BPL) and live coding.

BPL is a relatively recent1 development in machine learning that seeks to capture high-level aspects of human cognition. Its authors hypothesize that human cognition stems from three basic ingredients:

- Causality: we perceive things in cause-and-effect relationships

- Compositionality: larger concepts are composed of smaller, more basic concepts

- Learning-to-learn: as we learn, we learn how to get better at learning

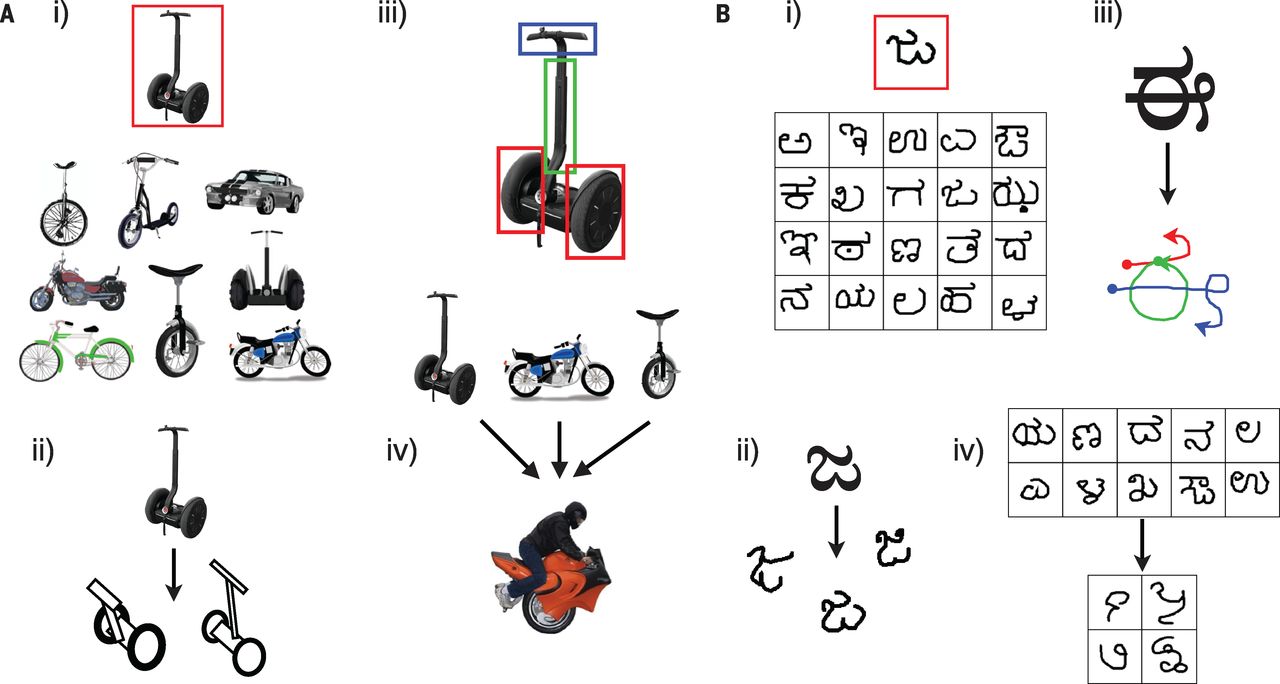

Figure from the original paper that highlights the core cognitive process modeled in BPL. From the paper: “A single example of a new concept (red boxes) can be enough information to support the (i) classification of new examples, (ii) generation of new examples, (iii) parsing an object into parts and relations (parts segmented by color), and (iv) generation of new concepts from related concepts.” By drawing on the three ingredients above, humans have a remarkable ability to learn novel concepts from one or a few examples. BPL tries to do the same. (Source: Lake et al., 2015)

They took these three ingredients and smooshed them into one grand machine learning framework: Bayesian Program Learning. The original paper applied the framework to character recognition, but it generalizes naturally to other contexts, like music composition. So a friend and I got together, hashed out a way to make BPL do music, and coded up BayesClef.2

Live coding is a form of performative music that turns programming into an instrument. It involves, well, coding live. Performers will often project their code on a screen as they write it, improvising algorithms that generate music. It’s a unique blend of technology and creativity, taking very literally the notion that software is art.

So what if we took all the music-compositional-awesomeness of BPL, and brought it to a live-coding platform? Instead of improvising tunes on the spot, a performer could rely on the machine to generate perfect notes for them. Instead of worrying about the nitty-gritty details of the music, the performer can work on the broad-strokes, shaping the music at a higher level. The live coder becomes a live conductor, directing the efforts of a virtual, artificially intelligent orchestra.

bayz

bayz3 is a platform that brings BPL to live coding. It is implemented in Python4, and the full details for getting started can be found on the GitHub repo.

Architecture

bayz is composed of three parts:

- bayz band: live coding interface

- bayz beat: WebAudio client, for producing sound

- bayz server: server that connects the two pieces above

bayz band is a simple Jupyter notebook interface that allows a live coder to input instructions and produce sound. The example notebook included in the repo additionally sets up a server instance, which passes messages between bayz band and bayz beat.

bayz beat is a client that uses WebAudio to produce the actual sounds. The relevant HTML for bayz beat is included in the GitHub repo, but for convenience the website is also hosted here. Ensure you have a bayz server instance running locally and listening on port 42700 (the default), hit start, and you’re good to go!

Hit start!
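If bayz beat stays silent, a quick sanity check is to see whether anything is listening on the port at all. Here is a minimal sketch using only Python's standard library. Note that `is_port_open` is a hypothetical helper, not part of bayz, and it only confirms that a TCP connection succeeds, not that the listener is really a bayz server.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 42700, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds.

    Hypothetical helper for illustration; 42700 is the default bayz
    server port mentioned above.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unresolvable hosts.
        return False


if __name__ == "__main__":
    if is_port_open():
        print("Something is listening on localhost:42700")
    else:
        print("Nothing on localhost:42700 yet; start a bayz server first")
```

Run it in a terminal or a spare notebook cell before opening bayz beat.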

Making music with bayz band

bayz band is organized around the concept of a Band. In the spirit of the algorithm-as-musician, you the live coder become the “conductor” of this Band.

The example Jupyter notebook starts by initializing a Band:

b = Band(pre_gen=3)

The argument pre_gen tells the Band to go ahead and sample three pieces of music from the BPL model and prepare them to be played. Ideally, sampling would happen in real time, but given the limitations of a Python implementation, it doesn’t quite happen fast enough. So instead, we’ll sample a few pieces ahead of time, and play them when the time comes.

Once that finishes (it may take a few moments), go ahead and try adding a player to your Band

b.add_player()

Hit <ctrl>-<enter> to execute the notebook cell and voilà! We have music!

Add as many players as you like

b.add_player()

b.add_player()

b.add_player()

b.add_player()

b.add_player()

# ...

but be warned: if you add more players than the number in pre_gen, any extras will first need to sample music from the BPL model, which will slow down your flow considerably.
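To make the trade-off concrete, here is a rough sketch of the pre-sampling idea in plain Python. Everything in it is an assumption for illustration: `PreGenPool` and `toy_sampler` are made-up names, the toy sampler just picks random notes instead of running the BPL model, and bayz's real internals may look nothing like this.

```python
import random
from collections import deque
from typing import Callable, Deque, List


class PreGenPool:
    """Hypothetical illustration of pre-sampling; not bayz's actual code."""

    def __init__(self, sampler: Callable[[], List[int]], pre_gen: int = 3) -> None:
        self.sampler = sampler
        # Pay the sampling cost up front, before the performance starts.
        self.ready: Deque[List[int]] = deque(sampler() for _ in range(pre_gen))

    def next_piece(self) -> List[int]:
        if self.ready:
            # Fast path: hand out a piece that was sampled ahead of time.
            return self.ready.popleft()
        # Slow path: the pool is empty, so sample on demand.
        return self.sampler()


def toy_sampler() -> List[int]:
    """Stand-in for the (slow) BPL model: eight random MIDI notes."""
    return [random.randint(55, 79) for _ in range(8)]


pool = PreGenPool(toy_sampler, pre_gen=3)
# The first three requests are served from the pool; the last two sample on demand.
pieces = [pool.next_piece() for _ in range(5)]
```

With a genuinely slow sampler, those last two requests are where the flow would stall.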

If you feel the need to take a more direct hand in your music, you can also add a custom line of music

b.add_line([60, 63, 67])

which will play an arpeggiated C minor chord. The integers refer to MIDI note numbers.
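If MIDI numbers aren't second nature, a small converter helps. The sketch below is standalone Python, not part of bayz. It assumes the common convention that MIDI 60 is written C4 (some software calls it C3) and standard equal temperament with A4 = 440 Hz.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def midi_to_name(midi: int) -> str:
    """Name a MIDI note using sharps, with middle C (60) written as C4."""
    octave = midi // 12 - 1
    return f"{NOTE_NAMES[midi % 12]}{octave}"


def midi_to_freq(midi: int) -> float:
    """Equal-tempered frequency in Hz, tuned so that A4 (MIDI 69) is 440 Hz."""
    return 440.0 * 2 ** ((midi - 69) / 12)


# Print the name and approximate frequency of each note in the chord.
for note in [60, 63, 67]:
    print(note, midi_to_name(note), round(midi_to_freq(note), 2))
```

The three notes come out as C4, D#4 and G4. D#4 is the same pitch as E♭4, a minor third above C, which is what makes the chord minor.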

If you’d like to vary the rhythm, you can also specify a rhythm list:

b.add_line([60, 63, 67], rhythm=[1,2,3])

which gives the relative duration of each note. Hence, 60 will be played half as long as 63, and a third as long as 67. The length of the rhythm list doesn’t have to match the length of the notes list:

b.add_line([60, 63, 67], rhythm=[1,2])

In this case, the rhythms cycle back, so 60 has length 1, 63 has length 2, and 67 has length 1 again.
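The cycling behavior is easy to mimic in plain Python. This is only a sketch of the idea, with made-up helper names (`pair_notes_with_rhythm`, `relative_shares`). How bayz implements it internally, and what it does when the rhythm list is longer than the notes list, is not something this sketch claims to know.

```python
from fractions import Fraction
from itertools import cycle
from typing import List, Tuple


def pair_notes_with_rhythm(notes: List[int], rhythm: List[int]) -> List[Tuple[int, int]]:
    """Pair each note with a relative duration, cycling the rhythm if it runs out."""
    return list(zip(notes, cycle(rhythm)))


def relative_shares(durations: List[int]) -> List[Fraction]:
    """Express each relative duration as a fraction of the whole phrase."""
    total = sum(durations)
    return [Fraction(d, total) for d in durations]


# A rhythm shorter than the notes list wraps around to its start.
print(pair_notes_with_rhythm([60, 63, 67], [1, 2]))
# Each note's share of the phrase for rhythm=[1, 2, 3].
print(relative_shares([1, 2, 3]))
```

With rhythm=[1,2,3], the three notes take up 1/6, 1/3 and 1/2 of the phrase respectively, which matches the "half as long" and "a third as long" relationships described earlier.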

If you’re tired of the default sound, you can try a new instrument:

b.add_line([60, 63, 67], rhythm=[1,2], instrument='bell')

which will produce a church-bell-esque sound, or

b.add_line([60, 63, 67], rhythm=[1,2], instrument='warble')

which will give you a somewhat haunting vibrato.

Both the rhythm and instrument keywords also work with add_player().

Remember to re-execute the cell every time you make a change. Give the changes a moment to propagate and sync, and before long you’ll have new music!

Looking ahead

bayz is still extremely new, highly experimental software (like pre-pre-pre alpha v0.0.0.0.1), so there’s still plenty of work to do before it’s ready for the algoraves. Some nice-to-haves for a future iteration might include:

- a C implementation of the BPL model, so we can achieve real-time sampling

- a model of interactions between players

- additional genres of music in the training data5

- bayz beat visuals that sync with the music

- anything else that sounds awesome!

Please enjoy, make it your own, and send me a PR if you find something buggy (inevitable) or come up with something new (very much appreciated).

1. Circa 2015, which I guess, depending on your point of view, is either blindingly recent measured in academia years, or eons ago measured in ML years.

2. If you’re curious about the specifics, our original writeup is linked here and music samples can be found here.

3. For the record, “bayz” should be spelled all lowercase. Not “Bayz,” “BAYZ,” “BayZ,” and definitely not “bAYz.”

4. Need a brush up on some Python? Forget the difference between lists and tuples? Worry not! I’ve got you covered.

5. The current BPL model is trained on a corpus of about 360 pieces by Bach. Why him? It was the most accessible corpus in the format I needed. Plus, Bach sounds surprisingly good in an electronic music context. If he were alive today, I have full faith he would be an electronica artist.